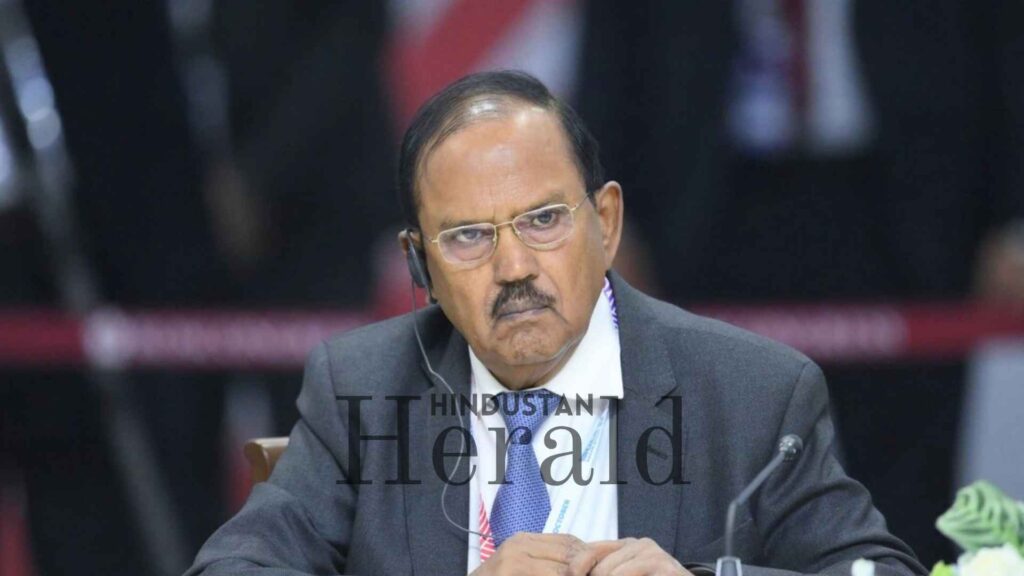

New Delhi, November 19: The video that set off a fresh round of arguments this week was not new, not edited, and certainly not generated by any clever software. It was a plain recording from 2014 of Ajit Doval speaking at an event in Australia. Yet by the time it resurfaced in 2025, a single misheard word had turned it into a political spark plug.

People online claimed the National Security Advisor had said that “more Hindus are attracted to ISIS than Muslims.” Some broadcasters ran that line as fact. Others added a dramatic twist, calling the clip a possible deepfake. By the time fact-checkers stepped in, the confusion had gathered its own momentum.

The truth, as verified now, is much simpler. And much less dramatic.

How ISI Became ISIS And Set Off A Storm

The full lecture, uploaded on March 20, 2014, by the Australia India Institute, shows Doval speaking about cross-border intelligence. At one point, he mentions the ISI, noting that it had “recruited more Hindus than Muslims for intelligence tasks in India.”

That was it. A technical point from a long speech on espionage strategy.

But once clipped and circulated, the reference to ISI morphed into ISIS, a shift that flipped the meaning entirely. According to NewsChecker, this mix-up was at the heart of the viral misunderstanding. It pushed the old lecture into a conversation it was never intended for, and framed Doval as commenting on radicalisation instead of intelligence history.

By the time the distinction reached the public, the misquote had already travelled much farther than the correction.

A Denial That Added Another Layer

The controversy deepened on November 17, when Doval publicly said he had never made the alleged ISIS remark. Speaking to reporters, he suggested that the clip circulating online could be a deepfake and warned about AI being used to damage public trust.

According to Moneycontrol, Doval treated the moment as an example of how synthetic media could threaten national security. Coming from someone in his position, the statement carried weight. It also created a new puzzle for the public. If the NSA called it fake, was the clip truly from 2014 or not?

The denial, intended to put the matter to rest, ended up expanding the uncertainty.

Fact-Checkers Bring The Discussion Back To Ground

Within two days, the claim of deepfake manipulation began to collapse under scrutiny. Teams from BOOM Live, NewsChecker, and Alt News analysed the footage, comparing it to the original upload and running it through forensic tools.

Their findings lined up clearly.

The clip was authentic.

The voice was natural.

The video frames showed no tampering.

BOOM reported that neither the audio nor the visuals exhibited signs of AI-generated content. NewsChecker stressed that the only real problem was the misinterpretation of ISI as ISIS. Alt News confirmed that the resurfaced clip matched the 2014 lecture exactly.

Once those conclusions were released, the deepfake narrative began to fade. But the fact that it had gained traction so quickly says something important about today’s information environment.

A Media Misstep That Made Everything Worse

The episode is not just about technology. It is also about how quickly a mistaken report can snowball.

When outlets repeated the incorrect quote without verifying the original lecture, they unintentionally strengthened the false narrative. A misheard word became a headline, and a clipped video became the basis for sweeping claims about religious radicalisation.

According to NewsChecker, this early misreporting played a major role in the confusion that followed. Once the claim reached social media, it became almost impossible to control its direction. Every repost stripped away more context, and the gap between the viral claim and the archival reality widened.

For anyone in the media, the incident is a reminder of why older footage must be checked at the source before being repackaged for a new audience.

Doval’s Warning Still Strikes A Nerve

Although his deepfake suspicion was incorrect in this case, Doval’s concern is not misplaced. India is entering a period where doctored clips can appear convincingly real. Election campaigns, diplomatic messaging, even emergency responses could be disrupted by manipulated media that looks authentic at first glance.

When the public begins doubting even genuine footage, every piece of video can become a potential dispute. That uncertainty by itself can be a threat.

This incident showed how fast doubt can spread. A real clip from 2014, openly available online, was widely questioned because the public was already primed to expect digital manipulation. If an authentic video can fall under suspicion so easily, the challenge ahead is serious.

One Missing Clarification From The NSA’s Office

Despite fact-checkers settling the matter, Doval’s office has not released any updated clarification confirming the 2014 context. His denial remains the only official remark on record, and it does not account for the original lecture or the ISI reference.

That silence leaves an awkward gap. The public has the facts, but not a clean acknowledgment from the senior-most security official involved. A brief statement would close the loop and help prevent lingering speculation.

Still, this is a familiar pattern. Misinformation spreads quickly. Corrections arrive late. Institutional communication rarely keeps pace with public confusion.

A Snapshot Of India’s Information Vulnerability

This controversy, small as it might seem in the larger political landscape, reveals something telling about India’s media climate. Archival clips are regularly pulled into present-day arguments. Social media rewards speed over accuracy. And once a narrative gains velocity, facts arrive as an afterthought.

In this case, a misunderstanding triggered a national-level discussion, a high-ranking denial, and a wave of fact-checks. All that effort went into confirming what the original 2014 upload had shown all along: that the video was genuine, and that the context had simply been lost.

Where Everything Lands Now

The situation is finally clearer.

The video is from 2014.

The remark refers to the ISI, not ISIS.

There is no evidence of deepfake manipulation.

What remains is a small but telling example of how fragile public understanding can become when misinformation, resurfaced clips, and fears around AI intermingle. The episode will pass, but the vulnerabilities it exposed will continue to shape how India navigates digital information in the years ahead.

Stay ahead with Hindustan Herald — bringing you trusted news, sharp analysis, and stories that matter across Politics, Business, Technology, Sports, Entertainment, Lifestyle, and more.


Covers Indian politics, governance, and policy developments with over a decade of experience in political reporting.